Azure Data Factory

Hybrid data integration at enterprise scale, made easy

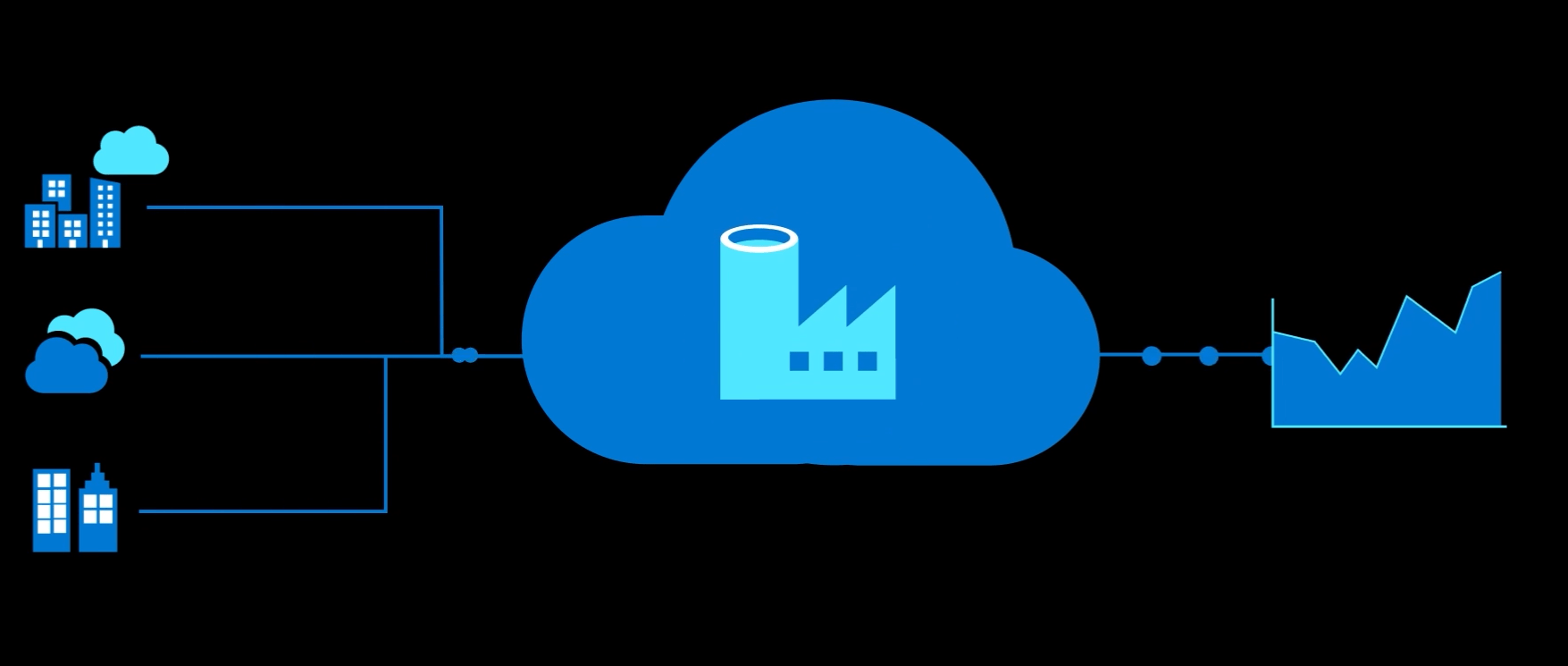

Azure Data Factory is a cloud-based data integration service that orchestrates and automates the movement and transformation of data.

Using Azure Data Factory, you can create and schedule data-driven workflows (pipelines) that ingest data from disparate data stores.

It can process and transform the data by using compute services such as Azure HDInsight Hadoop, Spark, Azure Data Lake Analytics, and Azure Machine Learning.

Organizations in any industry can use it for a rich variety of use cases:

- Data Engineering

- Migrating on-premises SSIS packages to Azure

- Operational data integration

- Analytics

- Ingesting data into data warehouses

How does it actually work?

Pipelines (data-driven workflows) in Azure Data Factory typically perform the following four steps:

- Connect and collect

- Transform and enrich

- Publish

- Monitor
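
The four steps can be pictured as stages that run in order, with each stage handing its result to the next. The following is a minimal plain-Python sketch, not Azure Data Factory code: the function names, the in-memory "stores", and the sample records are all assumptions made up for illustration.

```python
# Hypothetical in-memory stand-ins for source data stores (not real ADF connectors).
SOURCES = {
    "crm": [{"customer": "a", "amount": "10"}],
    "web": [{"customer": "b", "amount": "5"}, {"customer": "a", "amount": "7"}],
}


def connect_and_collect(sources: dict) -> list:
    """Step 1: gather raw records from every source into one staging list."""
    staged = []
    for name, records in sources.items():
        for record in records:
            staged.append({**record, "source": name})
    return staged


def transform_and_enrich(staged: list) -> dict:
    """Step 2: convert amounts to numbers and total them per customer."""
    totals = {}
    for record in staged:
        customer = record["customer"]
        totals[customer] = totals.get(customer, 0) + int(record["amount"])
    return totals


def publish(totals: dict, warehouse: dict) -> None:
    """Step 3: load the transformed result into the destination store."""
    warehouse.update(totals)


def run_pipeline(sources: dict, warehouse: dict) -> list:
    """Run the steps in order; the returned log stands in for step 4 (monitor)."""
    log = []
    staged = connect_and_collect(sources)
    log.append(("connect_and_collect", len(staged)))
    totals = transform_and_enrich(staged)
    log.append(("transform_and_enrich", len(totals)))
    publish(totals, warehouse)
    log.append(("publish", len(warehouse)))
    return log


warehouse = {}  # hypothetical destination store
run_log = run_pipeline(SOURCES, warehouse)
```

In this sketch, monitoring is reduced to a list of step names and record counts. In the real service, pipeline runs are monitored through the service itself rather than through a hand-built log.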

Data factory concepts

- Pipeline: a logical grouping of activities that together perform a unit of work.

- Activity: a processing step in a pipeline.

- Linked services: the connection information needed to connect to external sources.

- Datasets: represent data structures within the data stores, while the linked service defines the connection to the data source.

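- Integration runtimes: the compute infrastructure on which an activity runs or from which it is dispatched. The activity defines the action to be taken and the linked service defines the target data store or compute service; the integration runtime provides the bridge between the two.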

- Data flows: graphs of data transformation logic that you create and manage, and that you can use to transform data of any size.
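
One way to see how these concepts reference each other is to model them as small data classes. This is only an illustrative sketch in plain Python: the class and field names (`connection_info`, `location`, `kind`, and so on) and the example resource names are assumptions chosen for readability. They are not the service's actual definition schema or SDK.

```python
from dataclasses import dataclass, field


@dataclass
class IntegrationRuntime:
    name: str  # compute environment bridging activities and linked services


@dataclass
class LinkedService:
    name: str
    connection_info: str         # placeholder for connection details
    runtime: IntegrationRuntime  # runtime used to reach the external store


@dataclass
class Dataset:
    name: str
    linked_service: LinkedService  # which store holds the data
    location: str                  # e.g. a folder path or table name


@dataclass
class Activity:
    name: str
    kind: str  # e.g. "copy" or "transform" (hypothetical labels)
    inputs: list = field(default_factory=list)
    outputs: list = field(default_factory=list)


@dataclass
class Pipeline:
    name: str
    activities: list = field(default_factory=list)

    def linked_services(self) -> set:
        """Names of every linked service the pipeline's activities touch."""
        return {
            dataset.linked_service.name
            for activity in self.activities
            for dataset in activity.inputs + activity.outputs
        }


# Hypothetical example: copy raw files from blob storage into a warehouse table.
runtime = IntegrationRuntime("default_runtime")
blob = LinkedService("blob_store", "<connection details>", runtime)
sql = LinkedService("sql_warehouse", "<connection details>", runtime)
raw_files = Dataset("raw_sales_files", blob, "sales/raw/")
sales_table = Dataset("sales_table", sql, "dbo.Sales")
copy_step = Activity("copy_sales", "copy", inputs=[raw_files], outputs=[sales_table])
pipeline = Pipeline("load_sales", activities=[copy_step])
```

Here `pipeline.linked_services()` returns the names of both stores. The pipeline reaches them only indirectly: a pipeline groups activities, activities read and write datasets, and each dataset points at a linked service that is reached through an integration runtime.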

What is a data lake?

A data lake plays an important part in many Azure Data Factory solutions, so what exactly is a data lake?

It is a highly scalable, distributed, parallel file system in the cloud, designed specifically to work with multiple analytics frameworks.

With Azure Data Factory, you can build complex workflows that bring together structured and unstructured data from a wide variety of sources and transform it to meet your analytic goals.